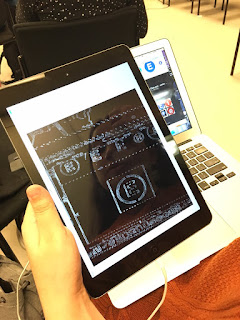

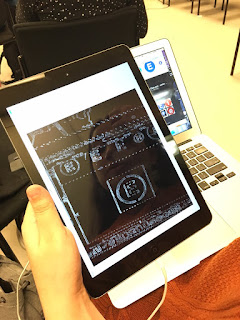


Wednesday, June 22, 2016

Progress Report 5/31/16

The iPad can now successfully recognize the 1 and 2 trains by counting white pixels. For now, the recognized train number appears only in our Xcode project. We hope that by our presentation, the recognized train number will appear on the iPad so that VoiceOver can read it aloud. A demo is attached.
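One possible way to turn a white-pixel count into a train number is to compare the fraction of white pixels inside the detected circle with a reference value for each sign and choose the closest. The C++ sketch below assumes that approach; `TrainReference`, `classifyTrain`, and the reference fractions are hypothetical, not our app's actual code or measurements.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// One reference per train line: the fraction of white pixels measured inside
// the detected circle for that sign. Values are hypothetical placeholders.
struct TrainReference {
    int train;             // train number, e.g. 1 or 2
    double whiteFraction;  // white pixels / pixels inside the circle
};

// Return the train whose reference fraction is closest to `observed`,
// or -1 ("unknown") if no reference lies within `maxDistance`.
int classifyTrain(double observed, const std::vector<TrainReference>& refs,
                  double maxDistance) {
    assert(maxDistance >= 0.0);
    int best = -1;
    double bestDistance = maxDistance;
    for (const TrainReference& ref : refs) {
        const double distance = std::fabs(observed - ref.whiteFraction);
        if (distance <= bestDistance) {
            bestDistance = distance;
            best = ref.train;
        }
    }
    return best;
}
```

With hypothetical references {1, 0.10} and {2, 0.18} and a tolerance of 0.03, an observed fraction of 0.11 maps to the 1 train, 0.17 to the 2 train, and 0.40 to -1. Returning "unknown" rather than always guessing keeps VoiceOver from announcing a train when the count matches neither sign.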

Thursday, May 5, 2016

Progress Report 4/5/16

While we are still looking for the OpenCV function that counts the number of white pixels in our camera's frame, we have started researching VoiceOver and how to incorporate it into our application. After testing the VoiceOver feature provided on the iPad (and other Apple products), we found that VoiceOver already detects elements within our application (such as the Start button on the application's opening page). Eventually, we hope VoiceOver will be able to read aloud the numbers, letters, etc. that our camera detects.
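The counting itself is simple. OpenCV's `cv::countNonZero` returns the number of non-zero pixels in a single-channel `cv::Mat`, so on a binarized frame (for example, the output of `cv::threshold`) it gives the white-pixel count directly. The dependency-free C++ sketch below does the same on a raw grayscale buffer; the function name and the `threshold` cut-off for "white" are assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Count "white" pixels in a row-major 8-bit grayscale image.
// A pixel counts as white when its value is at least `threshold`.
std::size_t countWhitePixels(const std::vector<std::uint8_t>& gray,
                             int width, int height, std::uint8_t threshold) {
    assert(gray.size() == static_cast<std::size_t>(width) * height);
    std::size_t count = 0;
    for (std::uint8_t value : gray) {
        if (value >= threshold) {
            ++count;
        }
    }
    return count;
}
```

For a 3x2 image with values {0, 255, 200, 10, 255, 199} and a threshold of 200, three pixels count as white.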

Tuesday, April 19, 2016

Progress Report Due: 4/17/16

While Leslie finishes normalizing the frame (by dividing the number of pixels by either the diameter or the radius of the circle), I have started researching VoiceOver within applications. We plan to focus on VoiceOver once the frame is normalized. For now, we have decided to set text recognition aside and revisit it later (if our app still needs it) once we have implemented, or at least better understand, VoiceOver.

Thursday, April 14, 2016

Progress Report Due: 4/10/16

Progress:

Focus/Problem:

This week, our project made progress on circle detection, as mentioned in the earlier post. The application's camera now masks every portion of the frame except the detected circle and the area within it. White pixels trace the circumference of the circle, and an additional white pixel marks its center.

Target Items for the Upcoming Week:

Although we made progress, we now plan to focus on stabilizing the detection: once the camera detects the circle, the masked frame flickers in and out rapidly. Mr. Lin suggested that we stabilize it by dividing the number of edge pixels by the radius or the diameter of the circle (an edge is a one-dimensional, linear feature, so its pixel count grows in proportion to the circle's size).


Thursday, April 7, 2016

Circle Detection Progress

Summary:

Today in class, the circle detection FINALLY started to work to our advantage. When we started working on circle detection, our goal was for our application's camera to detect circles within view and block out everything else the camera sees. As of today, our application can do so! We still have minor errors to fix, however (for instance, stabilizing the camera's focus on the circle).

This is the portion of code that was altered in class today:


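cv::Mat mask = cv::Mat::zeros( image.rows, image.cols, CV_8UC1 ); // single-channel mask, all 0
circle( mask, center, radius, Scalar(255), -1, 8, 0 ); // -1 means filled: 255 inside the circle
cv::Mat dst; // empty, so copyTo allocates it and zero-fills it before copying
image.copyTo( dst, mask ); // copy values of image to dst where mask > 0
image = dst; // everything outside the circle is now black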

Tuesday, April 5, 2016

Progress Report Due: 4/3/16

*Presentation week.*

Notes from other presentations:

Team 2:

-Work on the compound eye simulation frame is on hold.

-Artificial Intelligence that learns from past experiences. (Similar to statistics and data mining).

-Machine Learning method he is using: Regression Basics (Using a data set to predict values through line of best fit).

Residual sum of squares (RSS): the sum of the squared differences between actual and predicted values.

-Apply the regression method to object tracking, working on the drone's movement in three-dimensional space (the space is captured in two dimensions).

Next steps:

1) Integrate object tracking codes with machine learning algorithms.

2) Create data set: Position drone around the room on a coordinate plane.

3) Predict drone's location in a three-dimensional space.
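Team 2's regression notes can be illustrated with ordinary least squares: fit the line of best fit, then measure how far the actual values fall from it with the residual sum of squares, RSS = sum over i of (y_i - predicted y_i)^2. The C++ sketch below is our own illustration (`LineFit`, `fitLine`, and `residualSumOfSquares` are hypothetical names), not Team 2's code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct LineFit {
    double slope;
    double intercept;
};

// Ordinary least-squares line of best fit: y = slope * x + intercept.
LineFit fitLine(const std::vector<double>& x, const std::vector<double>& y) {
    assert(x.size() == y.size() && x.size() >= 2);
    const double n = static_cast<double>(x.size());
    double meanX = 0.0, meanY = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        meanX += x[i];
        meanY += y[i];
    }
    meanX /= n;
    meanY /= n;
    double sxy = 0.0, sxx = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        sxy += (x[i] - meanX) * (y[i] - meanY);
        sxx += (x[i] - meanX) * (x[i] - meanX);
    }
    assert(sxx > 0.0);  // the x values must not all be equal
    const double slope = sxy / sxx;
    return {slope, meanY - slope * meanX};
}

// Residual sum of squares: sum of (actual - predicted)^2 over all points.
double residualSumOfSquares(const std::vector<double>& x,
                            const std::vector<double>& y, LineFit fit) {
    double rss = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        const double residual = y[i] - (fit.slope * x[i] + fit.intercept);
        rss += residual * residual;
    }
    return rss;
}
```

For the points (0, 0), (1, 1), and (2, 1), the fitted line is y = 0.5x + 1/6 and the RSS is 1/6.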

Team 5: Hand-to-Hand Communication

-Using EMG signals from their arms to mimic human body movements.

-Data Acquisition Recap: 1 Muscle, 2 Electrodes at a time. (Muscles completed: Extensor digitorum, extensor pollicis brevis)

-Finding the "peaks of the data": the local maxima (highest values) of each finger movement. This is difficult when an entire signal is recorded, because the data is choppy rather than clean.

-Graphed the peaks in Excel and numbered them (A is where a range starts, B is where it ends).

-Alternative strategy for the project: Xcode. Using Xcode, they would put the data into a column, and the software would automatically detect the maxima.

-With all five fingers, it is still hard to tell the fingers apart, except for the thumb.

-Possible alternative: use planes to separate the data.
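Finding the "peaks of the data" amounts to finding local maxima. A minimal C++ sketch, assuming a sampled signal stored in a vector and a hypothetical `minHeight` cut-off that ignores small, choppy bumps (this is our illustration, not Team 5's method):

```cpp
#include <cstddef>
#include <vector>

// Return the indices of local maxima ("peaks") in a sampled signal.
// A sample is a peak when it exceeds `minHeight` and is strictly greater
// than both neighbors; flat-topped peaks are therefore not reported.
std::vector<std::size_t> findPeaks(const std::vector<double>& signal,
                                   double minHeight) {
    std::vector<std::size_t> peaks;
    for (std::size_t i = 1; i + 1 < signal.size(); ++i) {
        if (signal[i] > minHeight && signal[i] > signal[i - 1] &&
            signal[i] > signal[i + 1]) {
            peaks.push_back(i);
        }
    }
    return peaks;
}
```

For the samples {0, 2, 1, 5, 3, 3, 0.5, 1, 0} with `minHeight` = 1.5, the peaks are at indices 1 and 3; the bump at index 7 is ignored because it falls below the cut-off.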

Team 8: Educational Mobile Apps for the Visually Impaired:

-Senseg Tablet: Register the Device, Set up the Environment, Open the Stand-alone SDK Manager on an existing project (in Android Studio), launch the SDK manager, download the Senseg SDK from the 'Extras' folder.

-Import Haptic feedback package from the SDK, declare context, attribute set, and content in Java format, create an if/else statement.

-The code allows Gabi & Lola to set the intensity level and the area that the haptic feedback covers.

-They'd like to add edges in order to enhance the feel of haptic feedback. By defining the edges, the haptic feedback is more obvious to the user.

-Future Goals: VoiceOver, Edges (to enhance the Haptic Feedback), Circles

Team 4: Brainwave Controlled Games/Devices: OpenVIBE:

-Stages of running a successful BCI scenario: Brainwave acquisition, signal processing/filtering, feature extraction, classification, online testing.

-Brainwave Acquisition (using the NeuroSky MindWave headset with USB).

-Temporal Filtering (a plugin box used to filter the input signal (raw data) to extract a specific frequency range).

-Raw data is used for the separation of data based on frequency bands.

-Time-Based Epoching: generates signal "slices" or "blocks" with a specified duration and interval (e.g., if calculations are made every second, one value is provided per second).

-Simple DSP: a box used to apply mathematical formulas to a data set (e.g., log(1+x) to compute logarithmic band power).

-Classification Algorithms: each feature vector is a point in two-dimensional space (when you think "left," the alpha and beta waves behave differently than when you think "right," so the points cluster differently in two-dimensional space).

-SVM Analysis: Support Vector Machine (finds the line, or hyperplane, oriented so that the margin between the support vectors is maximized).
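The epoching and "Simple DSP" steps can be sketched in plain C++. This assumes the signal has already been band-pass filtered (the temporal filtering step) and that band power is the mean of the squared samples in each epoch; OpenViBE performs these steps with its own boxes, so `epochSignal` and `logBandPower` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Time-based epoching: cut a signal sampled at `sampleRate` Hz into windows
// of `durationSec` seconds, starting a new window every `intervalSec` seconds.
// An incomplete window at the end is dropped.
std::vector<std::vector<double>> epochSignal(const std::vector<double>& signal,
                                             double sampleRate,
                                             double durationSec,
                                             double intervalSec) {
    const auto length = static_cast<std::size_t>(durationSec * sampleRate);
    const auto step = static_cast<std::size_t>(intervalSec * sampleRate);
    assert(length > 0 && step > 0);
    std::vector<std::vector<double>> epochs;
    for (std::size_t start = 0; start + length <= signal.size(); start += step) {
        const auto first = signal.begin() + static_cast<std::ptrdiff_t>(start);
        epochs.emplace_back(first, first + static_cast<std::ptrdiff_t>(length));
    }
    return epochs;
}

// Band power of one epoch as the mean of its squared samples, followed by
// the log(1 + x) transform from the "Simple DSP" note.
double logBandPower(const std::vector<double>& epoch) {
    assert(!epoch.empty());
    double power = 0.0;
    for (double sample : epoch) {
        power += sample * sample;
    }
    power /= static_cast<double>(epoch.size());
    return std::log(1.0 + power);
}
```

For example, a 10-sample signal at 4 Hz with 1-second epochs every 0.5 seconds yields 4 epochs of 4 samples each, starting at samples 0, 2, 4, and 6.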

Team 6: The Forefront of EEG Research:

-Electroencephalography (EEG) provides new insight into how humans work.

-Invasive vs. Non-Invasive

-Invasive: electrodes are placed beneath the scalp; they record specific areas of interest and pick up only a small amount of noise, but surgery is required and the process is long and costly.

-Non-Invasive: electrodes are placed on the outside of the scalp; they pick up more noise and are less specific, and the results depend on the arrangement of the electrodes.

-Hans Berger: made the first non-invasive EEG recording in humans.

-Brainwaves provide context for the data users find and visualize states of mind, and classifying the waves can be useful for projects, applications, and research.

-Medical use for EEG: provides solutions and methods to overcome mental and physical obstacles (e.g., the mental disorder ADHD).

-Educational use for EEG: educates both yourself and those around you on the processes of the brain, serves as a learning tool for classrooms and tutoring, and gathers data to build a broad picture of all brains (a similar project: the Human Genome Project).

-Recreational use for EEG: The brain becomes a new kind of controller and provides opportunities for new kinds of gamers.

-Baseline Scan: like a control group; it shows, for instance, whether high beta waves are your natural state, and they'll base their algorithms on it.

-Emotiv: founded in 2011, Emotiv is a company that provided information on the EPOC headset, which has contributed to her research thus far.

-The Emotiv Forum has been a place where she has found others working in the same area with questions similar to those of her own.

Team 10: Multi-Pixel Display Design:

-Designing a Printed Circuit Board (PCB: an electronic circuit with small strips of conducting material, such as copper).

-Using a PCB as a prototype for finger sensing experiment (they need to find out size & voltage).

-In order to design the layout they have to calculate (widths/lengths of pixels, number of pads in a column) and choose shapes for experiment. Then, they draw this by creating a 12 by 12 pad with 1mm by 1mm as widths for the pixels and then erasing unnecessary connections to focus on the shapes.

-Important Factors: touch sensitivity (only a fingertip is used to feel; control over voltage), minimum width (1 mm by 1 mm per pixel), standard trace width = 0.5 mm (when widths change, keep the same spacing length), and shape (see how many pads one row can fit).

-Issues: Users might feel the electrovibration produced by the lines between the pixels (Potential Solution: Eventually they'll be able to use a double-sided PCB so that the pixels will be connected through the board instead of between pixels), the shapes may be too similar to each other to be distinctly recognizable, and users will not know where to move their fingers.

-Process: 1) Designing 2) Printing 3) Etching

Video Games for Autistic Children:

-Integrate the functionalities of a video game with specific functions that help autistic children learn certain social skills.

-Acquired Knowledge: creating a website, adding music files, importing image files into the game, and using Tiled.

-Importing Images into Processing: 1) Format .PNG files into grid. 2) Assign values to each 16x16 grid space (a. for the sprites, each image is given a name) 3) Functions are called to draw each image under certain conditions. 4) The function loads images from each file sequentially. 5) When the images are drawn the size is doubled.

-Classes: templates that bundle data with the functions that act on it; an object made from a class can be called on to draw or do something. Classes make the whole coding process easier because the same class behaves differently depending on the values you set.

-Arrays: sequences of elements of the same data type (for example, all the elements of an array are of type int (whole numbers), or all of them are of type char).
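A short C++ illustration of the two ideas above. The team worked in Processing, which is Java-based, so this is only an analogy: a class bundles data with the functions that use it, and an array holds a fixed number of elements of one type. `Sprite` and `makeRow` are hypothetical; the 16x16 tile size and the doubled drawing size come from the notes.

```cpp
#include <array>
#include <cassert>
#include <string>
#include <utility>

// A class bundles data with the functions that use it. Each Sprite stores a
// name and a position on a 16x16-pixel tile grid (illustrative names only).
class Sprite {
public:
    Sprite(std::string name, int gridX, int gridY)
        : name_(std::move(name)), gridX_(gridX), gridY_(gridY) {
        assert(gridX >= 0 && gridY >= 0);
    }

    // Screen position when each 16x16 tile is drawn at double size.
    int screenX() const { return gridX_ * kTileSize * kScale; }
    int screenY() const { return gridY_ * kTileSize * kScale; }
    const std::string& name() const { return name_; }

private:
    static constexpr int kTileSize = 16;
    static constexpr int kScale = 2;
    std::string name_;
    int gridX_;
    int gridY_;
};

// An array holds a fixed number of elements of one type: here, three Sprites.
std::array<Sprite, 3> makeRow() {
    return {{Sprite("player", 0, 0), Sprite("tree", 1, 0), Sprite("house", 2, 0)}};
}
```

For example, the third sprite in `makeRow()` sits at grid column 2, so its screen x-position is 2 × 16 × 2 = 64.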

Image Processing to Identify Junctions & Lines:

1) A. Guiding System (Path is marked on the floor, drone uses down-facing camera)

B. Identify Lines (I. Thresholding, II. Creating Blob Data, III. Get Blob Data (center: get the location of each pixel and average the locations) (radial difference: draw radii to the edge pixels, find the greatest and least radii, and calculate the difference between them) (heading: calculate the angle of the longest radius, arctan y/x), IV. Choose the blob with the largest radial difference (turn parallel to the line, then move until directly over it)).

C. Switching Lines

D. Location= # of junctions passed

E. User Sets Path at the Beginning (Path: pi/2)
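Step B.III can be sketched as follows. This C++ example assumes the blob's pixels and edge pixels have already been extracted (steps I and II), and it uses `atan2(dy, dx)` rather than a plain arctan(y/x) so that the heading lands in the correct quadrant. `Pixel`, `BlobInfo`, and `describeBlob` are hypothetical names, not the team's code.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

struct Pixel {
    int x;
    int y;
};

struct BlobInfo {
    double cx;                // center x: average x of the blob's pixels
    double cy;                // center y: average y of the blob's pixels
    double radialDifference;  // longest radius minus shortest radius
    double heading;           // angle (radians) of the longest radius
};

// `blob` holds all of a blob's pixels and `edge` its edge pixels; extracting
// them (thresholding, building blob data) is assumed to happen beforehand.
BlobInfo describeBlob(const std::vector<Pixel>& blob,
                      const std::vector<Pixel>& edge) {
    assert(!blob.empty() && !edge.empty());
    BlobInfo info{0.0, 0.0, 0.0, 0.0};
    for (const Pixel& p : blob) {
        info.cx += p.x;
        info.cy += p.y;
    }
    info.cx /= static_cast<double>(blob.size());
    info.cy /= static_cast<double>(blob.size());

    double longest = -1.0;
    double shortest = std::numeric_limits<double>::infinity();
    for (const Pixel& p : edge) {
        const double dx = p.x - info.cx;
        const double dy = p.y - info.cy;
        const double r = std::hypot(dx, dy);  // radius from center to this edge pixel
        if (r > longest) {
            longest = r;
            // atan2 keeps the correct quadrant, unlike a plain arctan(y/x).
            info.heading = std::atan2(dy, dx);
        }
        if (r < shortest) {
            shortest = r;
        }
    }
    info.radialDifference = longest - shortest;
    return info;
}
```

For a horizontal line of pixels from x = 0 to x = 10, the center is (5, 0), the radial difference is 5, and the heading lies along the x-axis. A long, thin blob like this has a large radial difference, which is why step IV picks the blob with the largest one.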


Progress Report Due: 3/27/16


Progress:

Focus/Problem:

We are still in the process of adding a circle detection feature into our code. Through this, we plan for our app's camera to mask circles within range, so that all objects outside the circle are not shown. By doing this, we can focus the app's camera on a smaller portion of the frame, making it easier to eventually recognize numbers and letters within the circles.

As of right now, the application detects the circle and finds its center. However, it is very glitchy: the circle's outline and center do not remain steady.

This upcoming week we will be focusing on our area of study/progress presentation.

Target Items for the Upcoming Week:

While we need to advance our project, this week's focus is our area of study/progress presentation. In this presentation, we plan to inform our classmates about HoughCircles, the OpenCV circle detection function based on the Hough transform. We will also discuss how we used the function in our code and how it benefits our application.
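To explain the idea behind the Hough transform, the simplified C++ sketch below finds the center of a circle with a known radius by voting. It is not how OpenCV implements `HoughCircles` (OpenCV uses the gradient-based `CV_HOUGH_GRADIENT` method and also searches over a range of radii); `houghCenter` is a hypothetical helper written only to illustrate voting.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Minimal Hough voting for circles of ONE known radius: each edge pixel votes
// for every candidate center `radius` pixels away from it (sampled at 1-degree
// steps), and the accumulator cell with the most votes is returned as (x, y).
std::pair<int, int> houghCenter(const std::vector<std::pair<int, int>>& edges,
                                int width, int height, int radius) {
    assert(width > 0 && height > 0 && radius > 0);
    const double kPi = 3.14159265358979323846;
    std::vector<int> votes(static_cast<std::size_t>(width) * height, 0);
    for (const auto& e : edges) {
        for (int deg = 0; deg < 360; ++deg) {
            const double t = deg * kPi / 180.0;
            const long a = std::lround(e.first - radius * std::cos(t));
            const long b = std::lround(e.second - radius * std::sin(t));
            if (a >= 0 && a < width && b >= 0 && b < height) {
                ++votes[static_cast<std::size_t>(b) * width + static_cast<std::size_t>(a)];
            }
        }
    }
    std::size_t best = 0;
    for (std::size_t i = 1; i < votes.size(); ++i) {
        if (votes[i] > votes[best]) {
            best = i;
        }
    }
    return {static_cast<int>(best % width), static_cast<int>(best / width)};
}
```

Given edge points sampled around a radius-10 circle centered at (20, 20) in a 40x40 image, the cell with the most votes lands at (or within a pixel of) (20, 20): every edge point votes for the true center, while votes for other cells are spread out.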

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

-Large circles to verify the app recognizes what we want it to recognize.

-Presentation practice with the Eno board.

Thursday, March 31, 2016

Progress Report Due: 3/20/16

Progress:


Focus/Problem:

We are still in the process of adding a circle detection feature into our code. Through this, we plan for our app's camera to mask circles within range, so that all objects outside the circle are not shown.

This is the portion of our code where the circle detection has been placed:

/// Apply the Hough Transform to find the circles
/// (src_gray is a grayscale copy of image; thresh is the Canny threshold, both defined elsewhere)
vector<Vec3f> circles;
HoughCircles( src_gray, circles, CV_HOUGH_GRADIENT, 1, src_gray.rows/8, 200, 100, 0, 0 );
for( size_t i = 0; i < circles.size(); i++ )
{
    cv::Point center(cvRound(circles[i][0]), cvRound(circles[i][1]));
    int radius = cvRound(circles[i][2]);
    // circle center
    circle( image, center, 3, Scalar(0,255,0), -1, 8, 0 );
    // circle outline
    circle( image, center, radius, Scalar(0,0,255), 3, 8, 0 );
    int xc = center.x;
    int yc = center.y;
    // Visit the square that bounds the circle. (The old condition "x < x+radius"
    // was always true, so the loop never ended.)
    for (int x = xc-radius; x <= xc+radius; x++)
    {
        for (int y = yc-radius; y <= yc+radius; y++)
        {
            // Unfinished "if": assumed intent is "is (x, y) inside the circle?"
            if ((x-xc)*(x-xc) + (y-yc)*(y-yc) <= radius*radius)
            {
                // per-pixel masking still to be written
            }
        }
    }
}
Mat canny_output;
vector<vector<cv::Point> > contours;
vector<Vec4i> hierarchy;
/// Detect edges using canny (once per frame; Canny needs a single-channel 8-bit image)
Canny( src_gray, canny_output, thresh, thresh*2, 3 );
/// Find contours
findContours( canny_output, contours, hierarchy, CV_RETR_TREE, CV_CHAIN_APPROX_SIMPLE, cv::Point(0, 0) );
image=canny_output;

As of right now, Xcode has not reported any specific issue: the code builds successfully, but it does not work properly when we open the application on the iPad.

Target Items for the Upcoming Week:

We will need to find the part of the code that is causing this glitch. We will also need to mask the camera's view around the circle so that the camera's focus is solely on the circle and what is inside it. In particular, we need to focus on the "if" statement in our code. Since we have to write this "if" statement ourselves, we are researching how to solve this issue.
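The "if" statement the loop needs is an inside-circle test: a pixel (x, y) belongs to the circle when (x - xc)^2 + (y - yc)^2 <= radius^2, which compares squared distances and avoids a square root. A dependency-free C++ sketch, assuming a row-major 8-bit grayscale buffer (`maskOutsideCircle` is a hypothetical name); with OpenCV, drawing a filled circle into a mask with `cv::circle` and calling `copyTo` with that mask achieves the same result.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Zero every pixel of a row-major 8-bit grayscale image that lies outside the
// circle with center (xc, yc) and the given radius; return how many pixels
// were kept (i.e. lie inside or on the circle).
std::size_t maskOutsideCircle(std::vector<std::uint8_t>& gray, int width,
                              int height, int xc, int yc, int radius) {
    assert(gray.size() == static_cast<std::size_t>(width) * height);
    std::size_t kept = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const long dx = x - xc;
            const long dy = y - yc;
            const bool inside =
                dx * dx + dy * dy <= static_cast<long>(radius) * radius;
            if (inside) {
                ++kept;
            } else {
                gray[static_cast<std::size_t>(y) * width + x] = 0;
            }
        }
    }
    return kept;
}
```

For a 9x9 image with a radius-3 circle centered at (4, 4), 29 pixels are kept and the rest are set to 0.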

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

-Large circles to verify the app recognizes what we want it to recognize.

Tuesday, March 22, 2016

Seminar (3/24 & 3/29) Abstract

We will be talking about the OpenCV function "HoughCircles" and how it pertains to our project. We will explain the essential purpose of HoughCircles and how its algorithm provides computer vision applications with circle detection. Additionally, we will briefly talk about our experience with HoughCircles and the difficulties we have encountered so far, and briefly show how we have included the algorithm in our code. Our main focus will be how the function will help us develop our application, in addition to explaining the algorithm to those unfamiliar with it. A draft of our first slide is shown below.

Thursday, March 17, 2016

Progress Report Due: 3/13/16

Progress:

Focus/Problem:

We are still in the process of adding a circle detection feature into our code. Through this, we plan for our app's camera to mask circles within range, so that all objects outside the circle are not shown. By doing this, we can focus the app's camera on a smaller portion of the frame, making it easier to eventually recognize numbers and letters within the circles.

As of right now, the application detects the circle and finds its center. However, it is very glitchy: the circle's outline and center do not remain steady.

Target Items for the Upcoming Week:

We will need to find the part of the code that is causing this glitch. We will also need to mask the camera's view around the circle so that the camera's focus is solely on the circle and what is inside it. Circle detection should help if it works as intended, but once we implement text recognition we can start working with VoiceOver (to relay the detected signs back to the app user). Eventually we will also need to combine the red color detection code (which worked earlier) with this code once it is complete, so that the app's camera can mask colors and detect circles simultaneously.

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

-Large circles to verify the app recognizes what we want it to recognize.

Progress Report Due: 3/6/16


Progress:

Focus/Problem:

We are in the process of adding a circle detection feature into our code. Through this, we plan for our app's camera to mask circles within range, so that all objects outside the circle are not shown. By doing this, we can focus the app's camera on a smaller portion of the frame, making it easier to eventually recognize numbers and letters within the circles.

As of right now though, the app's camera only detects edges (detecting any edge within range).

Target Items for the Upcoming Week:

Continue implementing circle detection so that we can return our focus to text recognition. Circle detection should help if it works as intended, but once we implement text recognition we can start working with VoiceOver (to relay the detected signs back to the app user). Eventually we will also need to combine the red color detection code (which worked earlier) with this code once it is complete, so that the app's camera can mask colors and detect circles simultaneously.

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

-Large circles to verify the app recognizes what we want it to recognize. No red items are needed right now, since we have temporarily removed the color detection code from our project in order to focus on circle detection. Eventually we hope to tie the two pieces of code together.


Monday, February 29, 2016

Progress Report Due: 2/28/16


Progress:

Focus/Problem:


This week Mr. Lin introduced us to an online pamphlet that contains an algorithm for circle detection. After talking it over with Mr. Lin, we decided that, since we've had difficulty with text recognition, we will start with circle detection. This will mask everything the camera sees except the circles it detects. With our app able to detect both color and circles, the area in which the camera has to search for text will be much smaller, which should make text easier to detect.

Target Items for the Upcoming Week:

Complete circle detection so that we can return our focus to text recognition. Circle detection should help if it works as intended, but once we implement text recognition we can start working with VoiceOver (to relay the detected signs back to the app user).

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

-Large numbers/circles to verify the app recognizes what we want it to recognize (a variety of circles and numbers, some of them not red, to make sure it is the text recognition that detects the numbers, not just the red color recognition).

Progress Report Due: 2/14/16

Progress:

Point of focus: Now that we have masked the camera's view to see only the color red, we are trying to get the camera to recognize/track numbers and characters in order to distinguish between subway sign circles that have the same color but stand for separate trains (e.g., the 4, 5, and 6 lines are all green, yet the trains are different).

Problem:

Difficulties Encountered/Plans for the Coming Week

While searching for optical character recognition (OCR) software that we could use from within our code, we found that many of the options are only compatible with tools like Tesseract, not Xcode. We will have to find the underlying algorithms and alter them ourselves so that they suit our Xcode platform.

-Decide how we want to recognize text (by altering an existing algorithm, finding contours, etc.)
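One simple way to go from a detected shape to a number is nearest-template matching. This sketch assumes each candidate region has already been cropped and shrunk to a tiny fixed-size binary image. The glyph is compared against one stored template per digit, and the closest template wins. This is a deliberately simplified stand-in, and full OCR engines such as Tesseract do far more. The 5x3 templates and function names below are hypothetical.

```python
# Hypothetical 5x3 binary templates ("1" = lit pixel); real digits on signs
# would need templates captured from actual sign images.
TEMPLATES = {
    "1": ["010",
          "110",
          "010",
          "010",
          "111"],
    "2": ["111",
          "001",
          "111",
          "100",
          "111"],
}


def pixel_difference(glyph, template):
    """Count positions where the glyph and the template disagree."""
    return sum(
        g != t
        for glyph_row, template_row in zip(glyph, template)
        for g, t in zip(glyph_row, template_row)
    )


def classify_glyph(glyph, templates=TEMPLATES):
    """Return the label of the template closest to `glyph`."""
    return min(templates, key=lambda label: pixel_difference(glyph, templates[label]))


# A "1" missing one pixel still matches the "1" template best.
noisy_one = ["010", "010", "010", "010", "111"]
print(classify_glyph(noisy_one))  # 1
```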

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

-Numbers/text (some red, some not, so that we can verify it is the text recognition identifying the numbers and not solely the red color detection).

Monday, February 8, 2016

Progress Report Due: 2/7/16


Progress:

Tasks Accomplished:


Now that our app's camera can mask what it sees so that only particular colors remain, we are focused on the feature that distinguishes similarly colored signs from one another: numbers. This past week Leslie successfully outlined objects in our app's camera view, which is the first step in many number recognition algorithms. Over the weekend, Kate began a tutorial (http://docs.opencv.org/2.4.2/doc/tutorials/imgproc/shapedescriptors/find_contours/find_contours.html) on finding contours in OpenCV. "Finding contours" is the first step in an algorithm she has chosen to follow for detecting numbers (http://stackoverflow.com/questions/10776398/extracting-numbers-from-an-image-using-opencv is one of the places where this algorithm can be found).
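OpenCV's `findContours` traces the borders of objects in a binary image. As a dependency-free approximation of the same step, the sketch below swaps in connected-component labeling. It flood-fills each blob of foreground pixels and reports the blob's bounding box, which is the kind of region a number-recognition step would then crop and examine. The function name and the choice of 4-connectivity are assumptions.

```python
from collections import deque


def component_boxes(binary):
    """Return (x, y, width, height) boxes for each 4-connected blob of nonzero pixels."""
    height, width = len(binary), len(binary[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if not binary[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            seen[y][x] = True
            queue = deque([(x, y)])
            min_x = max_x = x
            min_y = max_y = y
            while queue:
                px, py = queue.popleft()
                min_x, max_x = min(min_x, px), max(max_x, px)
                min_y, max_y = min(min_y, py), max(max_y, py)
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if 0 <= nx < width and 0 <= ny < height and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((min_x, min_y, max_x - min_x + 1, max_y - min_y + 1))
    return boxes


image = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 1],
]
print(component_boxes(image))  # [(0, 0, 2, 2), (4, 1, 1, 3)]
```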

Problem:

Difficulties Encountered/Plans for the Coming Week

As stated above, this past week Leslie successfully outlined objects in our app's camera view, which is the first step in many number recognition algorithms. However, this was done by taking out the code that masks everything our camera sees except the color red. In the coming week, it is important that we get both pieces of code working together in our app.
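Here is a minimal sketch of running both steps together. It assumes the red mask is already available as a binary image, and it outlines only the masked pixels instead of the raw frame. An outline pixel here is a foreground pixel with at least one background or out-of-frame 4-neighbor. This is a simple stand-in for illustration, not the method Leslie's code uses, and the function name is hypothetical.

```python
def outline(mask):
    """Return a binary image marking the border pixels of the foreground in `mask`."""
    height, width = len(mask), len(mask[0])
    result = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue
            for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                # Pixels beyond the frame edge count as background.
                outside = not (0 <= nx < width and 0 <= ny < height)
                if outside or not mask[ny][nx]:
                    result[y][x] = 1
                    break
    return result


# A 3x3 red patch in the mask: only its rim survives as the outline.
red_mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
for row in outline(red_mask):
    print(row)
```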

-Complete number recognition before break.

-Do more research on how our app will internally process the numbers it detects and how it will use that information.

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

-Red Objects (Solo Cup, Subway Sign, etc.)

Monday, February 1, 2016

Progress Report Due: 1/31/16

Progress:

Tasks Accomplished:


By the end of the STEM Hackathon, our app's camera successfully masked what it saw, singling out only the tracked color red (red being the only color implemented in our code right now).

We tested the camera on a red Solo cup and the "3" train's subway sign and got the following results:

Problem:

Difficulties Encountered:

-It took a few weeks to resolve this issue. Originally, we tried to convert what the camera saw from BGR to HSV. At the Hackathon, though, we realized that we could have kept Mr. Lin's original code, which let the camera see through a binary image.
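Mr. Lin's code isn't reproduced in these posts. As a general sketch of what a grayscale-then-binary pipeline looks like, each BGR pixel is first reduced to one brightness value. The weights are the ones OpenCV documents for its BGR-to-gray conversion (0.299 R + 0.587 G + 0.114 B). The brightness is then compared against a threshold, the way OpenCV's `THRESH_BINARY` mode works. The threshold of 127 and the function names are assumptions, not values from Mr. Lin's code.

```python
def bgr_to_gray(pixel):
    """Brightness of a (B, G, R) pixel using OpenCV's documented gray weights."""
    b, g, r = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def binary_threshold(frame, thresh=127, max_value=255):
    """Mimic THRESH_BINARY: max_value where gray > thresh, otherwise 0."""
    return [[max_value if bgr_to_gray(p) > thresh else 0 for p in row] for row in frame]


frame = [[(255, 255, 255), (0, 0, 0), (0, 0, 255), (0, 255, 0)]]  # white, black, red, green
print(binary_threshold(frame))  # [[255, 0, 0, 255]]
```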

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

-Red Objects (Solo Cup, Subway Sign, etc.)

- Plan:

Proposals/Steps to Attack the Problems/Action Items for the Coming Week:

-Get our application to track more colors (green, blue) and start focusing on how to distinguish between subway symbols that share a color yet stand for different trains (e.g., the 1, 2, and 3 lines share the same color, red). This could mean focusing on text recognition.
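This sketch shows why color alone is not enough once more colors are tracked. A pixel's hue can place a sign in a color family (red for the 1, 2, 3; green for the 4, 5, 6; blue for the A, C, E), but that only narrows the sign down to a group of lines. The hue ranges below use OpenCV's 8-bit scale (0-179) and are hypothetical guesses that would need tuning against real sign photos. The saturation and value cutoffs are assumptions too.

```python
def color_family(h, s, v, min_s=100, min_v=50):
    """Return "red", "green", "blue", or None for an (H, S, V) pixel.

    Thresholds are hypothetical, on OpenCV's 8-bit hue scale (0-179).
    """
    if s < min_s or v < min_v:
        return None  # too washed-out or too dark to call
    if h < 10 or h >= 170:
        return "red"  # red wraps around both ends of the hue scale
    if 35 <= h < 85:
        return "green"
    if 100 <= h < 130:
        return "blue"
    return None


# Color narrows the sign down to a group of lines but cannot pick one line.
LINES_BY_COLOR = {
    "red": ["1", "2", "3"],
    "green": ["4", "5", "6"],
    "blue": ["A", "C", "E"],
}

family = color_family(60, 255, 255)
print(family, LINES_BY_COLOR[family])  # green ['4', '5', '6']
```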

Experiments to Conduct, Ideas to Try, Vendors to Contact, Updated Schedule, etc.

-Hopefully we will have implemented all colors we want our application to be able to identify and have started text recognition in the coming week.

Thursday, January 28, 2016

Progress Report Due: 1/24/16

Progress:

*No progress report was posted the week of 1/17 since the week was a presentation week for Progress Reports.


Tasks Accomplished:

- Last week we began implementing code in our app that converts what the camera sees from BGR to HSV. The code is supposed to mask what the camera sees so that one tracked color is singled out and all surrounding objects are blacked out. We plan to work with Mr. Lin during the upcoming STEM Seminar in Regents Week to complete this task.
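The "blacking out" step can be sketched independently of how the mask is produced. Given a color frame and a binary mask of the same size, keep each pixel where the mask is nonzero and replace the rest with black. In OpenCV this is typically done with `bitwise_and` and its `mask` argument. The pure-Python function below is our own illustration of that behavior, not the app's code.

```python
def apply_mask(frame, mask):
    """Keep each pixel whose mask value is nonzero; replace the rest with black."""
    return [
        [pixel if keep else (0, 0, 0) for pixel, keep in zip(row, mask_row)]
        for row, mask_row in zip(frame, mask)
    ]


frame = [[(10, 20, 200), (30, 200, 40)],
         [(200, 10, 10), (15, 25, 210)]]
mask = [[255, 0],
        [0, 255]]  # e.g. the output of a color threshold
print(apply_mask(frame, mask))
# [[(10, 20, 200), (0, 0, 0)], [(0, 0, 0), (15, 25, 210)]]
```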

Problem:

Difficulties Encountered:

-Our Xcode project builds successfully, but it still doesn't do what we want it to do (stated above).

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

- Plan:

Proposals/Steps to Attack the Problems/Action Items for the Coming Week:

-Get our application to mask what it sees, singling out the color of our choice (whichever color(s) we place in the code).

- Alter the app so that it will detect the color of our choice so we can begin testing it out on subway signs.

Experiments to Conduct, Ideas to Try, Vendors to Contact, Updated Schedule, etc.

-Have the code successfully mask what the camera sees by the conclusion of our STEM Seminar. Hopefully we will have implemented all colors we want our application to be able to identify by the end of tomorrow's seminar as well.

Monday, January 18, 2016

Progress Meeting II Materials (1/12/16)

Slides:

https://docs.google.com/presentation/d/1HoOAw7tc3PbIurVUCWAieI4PzeHyTBee_94TQlBtrPo/edit#slide=id.p

Gantt Chart:

https://drive.google.com/drive/my-drive


Sunday, January 10, 2016

Progress Report Due: 1/10/16

Progress:

Tasks Accomplished:

-We have successfully implemented code in our app that converts what the camera sees from BGR to HSV, aside from a few minor errors that we expect to solve within the coming week. Essentially, we replaced the default "BGR to Grayscale" conversion in Mr. Lin's code so that the camera converts its footage from "BGR to HSV."
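When picking color ranges, it helps to know OpenCV's 8-bit HSV convention. Hue is halved so it fits in a byte (H runs 0-179), while saturation and value run 0-255. Below is a minimal per-pixel sketch of the conversion using Python's standard `colorsys` module, rescaled to that convention. It approximates OpenCV's own conversion rather than reproducing it exactly, and the function name is ours.

```python
import colorsys


def bgr_to_hsv_8bit(pixel):
    """Convert a (B, G, R) pixel with 0-255 channels to OpenCV-style 8-bit HSV.

    Hue is halved to fit a byte (0-179); saturation and value are scaled to 0-255.
    """
    b, g, r = pixel
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    # colorsys returns hue in [0, 1); 360 degrees / 2 = 180 steps. The modulo
    # folds a hue that rounds up to 180 back to 0, since both mean red.
    return (round(h * 180) % 180, round(s * 255), round(v * 255))


print(bgr_to_hsv_8bit((255, 0, 0)))  # blue  -> (120, 255, 255)
print(bgr_to_hsv_8bit((0, 255, 0)))  # green -> (60, 255, 255)
print(bgr_to_hsv_8bit((0, 0, 255)))  # red   -> (0, 255, 255)
```

Note the input order: OpenCV frames store channels as B, G, R, so the tuple is unpacked in that order before being handed to `colorsys`, which expects R, G, B.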

Questions Answered:

-How our app will single out certain objects. Converting from BGR to HSV allows the app's camera to track objects by extracting whichever colors we specify in our code.

Lessons Learned:

-We won't be able to find ready-made code for each capability we want our app to have; instead, we can take bits and pieces, learn from them, and build what we need.

Problem:

Difficulties Encountered:

-Although most of an online code sample (http://docs.opencv.org/master/df/d9d/tutorial_py_colorspaces.html#gsc.tab=0) worked well with our app, part of it was not compatible. This meant we had to go in and alter the code to meet our needs (which we had expected, and expect to do again in the future).

Equipment Required:

-Mac Laptops

-Mr. Lin's iPad & Cord

Show Stopper/Open Issues/New Risks:

- The code we have been working from is configured to identify red objects. We need to look more into the ranges of color features (such as hue, saturation, and value), which is where HSV comes in.
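On OpenCV's 8-bit hue scale (0-179), red sits at both ends, so a red mask is usually built from two hue ranges whose results are combined. Below is a minimal sketch of that idea. It uses an inclusive per-channel range check in the spirit of OpenCV's `inRange`. The bounds are illustrative guesses rather than values from our code, and the function names are hypothetical.

```python
def in_range(hsv, lower, upper):
    """True if every channel of `hsv` lies within [lower, upper], inclusive."""
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))


# Hypothetical (H, S, V) bounds: one range near hue 0, one near hue 179.
RED_LOW = ((0, 100, 100), (10, 255, 255))
RED_HIGH = ((160, 100, 100), (179, 255, 255))


def red_mask(hsv_frame):
    """255 where a pixel falls in either red range, 0 elsewhere."""
    return [
        [255 if in_range(p, *RED_LOW) or in_range(p, *RED_HIGH) else 0 for p in row]
        for row in hsv_frame
    ]


hsv_frame = [[(0, 255, 255), (175, 200, 200), (60, 255, 255), (5, 50, 255)]]
print(red_mask(hsv_frame))  # [[255, 255, 0, 0]]
```

The last pixel has a red hue but low saturation (a washed-out pink or white), so the saturation bound excludes it. Adjusting the saturation and value bounds is how a tracked color can be tightened or loosened.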

- Plan:

Proposals/Steps to Attack the Problems/Action Items for the Coming Week:

- Fix any minor malfunctions that occurred while switching the app from "BGR to Grayscale" to "BGR to HSV".

- Alter the app so that it will detect the color of our choice so we can begin testing it out on subway signs.

Experiments to Conduct, Ideas to Try, Vendors to Contact, Updated Schedule, etc.

-Continue to make sure our app runs on Mr. Lin's iPad as we add new code.

-Detect different colored objects as we alter our code to make sure the camera is tracking the correct objects.
